Optical localization system

Automatic localization of arbitrary moving targets is an essential component of systems in many domains, such as air traffic control, robotic workspaces, and surveillance and defense. If the target shares its own sensor measurements, deriving its location is straightforward. There are scenarios, however, where the target is unable (a malfunctioning aircraft) or unwilling (a UAV intruder) to reveal its location. The localization system must then rely solely on its own observations. Radars are currently the most widely used devices for localizing distant targets. However, they are energy-intensive and not easily portable. Furthermore, in defense applications the tracked object should not be able to detect that it is being tracked, a requirement that actively radiating systems such as radars cannot meet. Therefore, we developed a semi-autonomous, passive multi-camera system for tracking and localizing distant objects that relies solely on RGB cameras.

System overview

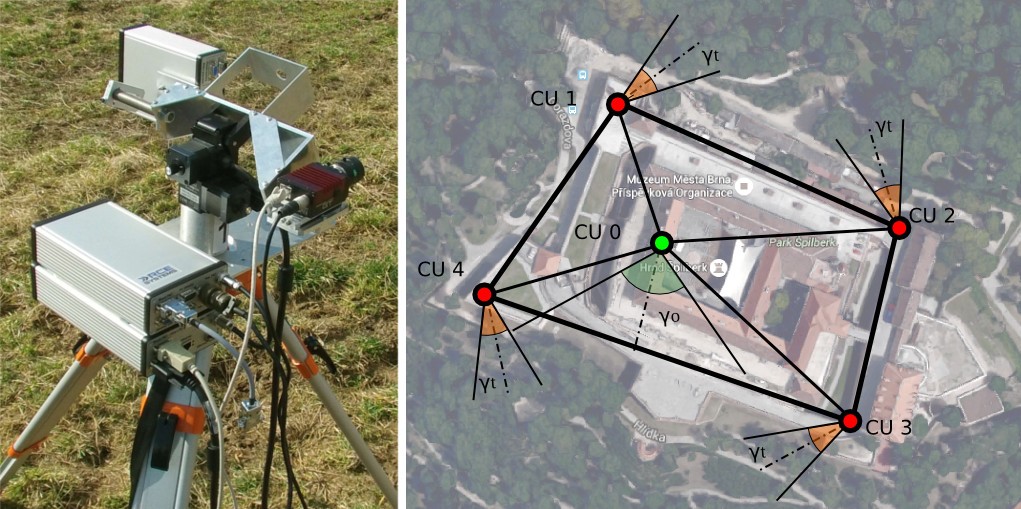

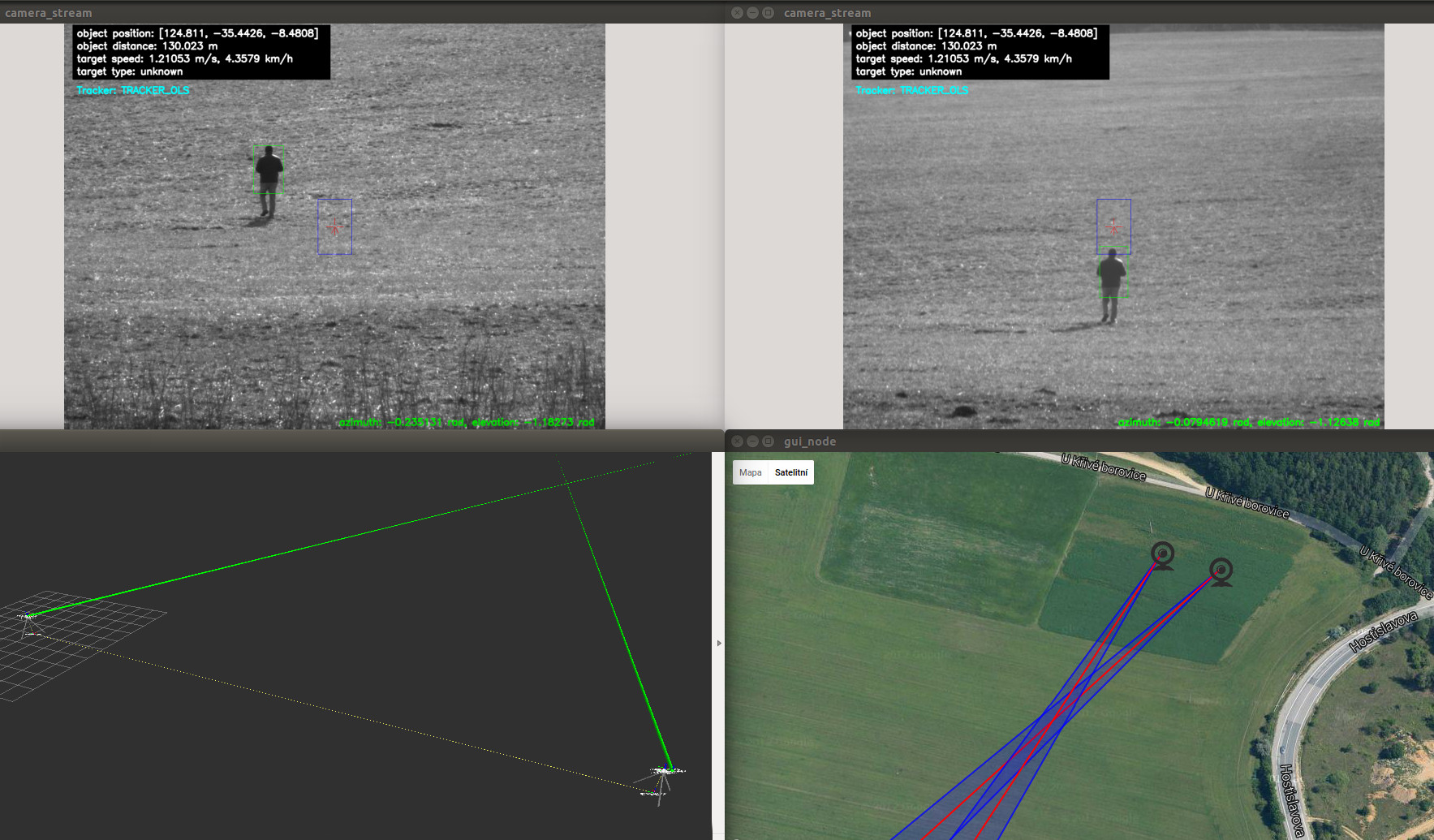

The main building block of the system is the camera unit, an independent collection of hardware modules comprising a positionable camera and various sensors used to estimate the geographical coordinates of the unit itself. The system is designed to work with an arbitrary number of camera units, positioned so as to cover the protected region. Target detection is performed in a man-in-the-loop manner, while visual tracking is automatic and based on two state-of-the-art approaches. The target location in 3D space is estimated by multi-view triangulation that works with noisy measurements. The system displays the estimated global geographical location of the target on an underlying map and continuously outputs the corresponding UTM coordinates. The cameras were calibrated and stationed using custom-designed calibration targets and a methodology intended to reduce the main sources of error.
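To illustrate the idea of multi-view triangulation from noisy viewing rays, here is a minimal sketch of one common formulation: finding the point with the smallest summed squared distance to all rays. It is not the system's actual implementation. The function names, the pan/tilt angle convention, and the local Cartesian frame in meters are assumptions made for this example, and the conversion to UTM coordinates is not shown.

```python
import math

def direction_from_pan_tilt(pan_rad, tilt_rad):
    """Unit viewing ray; pan is the azimuth from +x (counterclockwise), tilt the elevation.
    This angle convention is an assumption for the sketch."""
    return (
        math.cos(tilt_rad) * math.cos(pan_rad),
        math.cos(tilt_rad) * math.sin(pan_rad),
        math.sin(tilt_rad),
    )

def solve_3x3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("rays are (nearly) parallel; position is not observable")
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, 3):
            factor = m[row][col] / m[col][col]
            for k in range(col, 4):
                m[row][k] -= factor * m[col][k]
    x = [0.0, 0.0, 0.0]
    for row in (2, 1, 0):  # back substitution
        s = sum(m[row][k] * x[k] for k in range(row + 1, 3))
        x[row] = (m[row][3] - s) / m[row][row]
    return tuple(x)

def triangulate(origins, directions):
    """Point minimizing the summed squared distance to all lines origin + t * direction."""
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for c, d in zip(origins, directions):
        norm = math.sqrt(sum(v * v for v in d))
        d = [v / norm for v in d]
        for i in range(3):
            for j in range(3):
                # (I - d d^T) projects onto the plane perpendicular to the ray.
                p = (1.0 if i == j else 0.0) - d[i] * d[j]
                a[i][j] += p
                b[i] += p * c[j]
    return solve_3x3(a, b)

# Hypothetical layout: three camera units and one target, local frame in meters.
cams = [(0.0, 0.0, 0.0), (300.0, 0.0, 0.0), (0.0, 400.0, 10.0)]
target = (100.0, 200.0, 50.0)
rays = []
for cam in cams:
    dx, dy, dz = (t - c for t, c in zip(target, cam))
    pan = math.atan2(dy, dx)
    tilt = math.atan2(dz, math.hypot(dx, dy))
    rays.append(direction_from_pan_tilt(pan, tilt))
estimate = triangulate(cams, rays)
```

With noise-free rays the estimate coincides with the target. With noisy pan and tilt readings the rays no longer intersect, and the same linear system returns a compromise point, which is why additional camera units help. Note that the sketch treats each ray as an infinite line and does not check that the solution lies in front of the cameras.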

Advantage of using multiple camera units

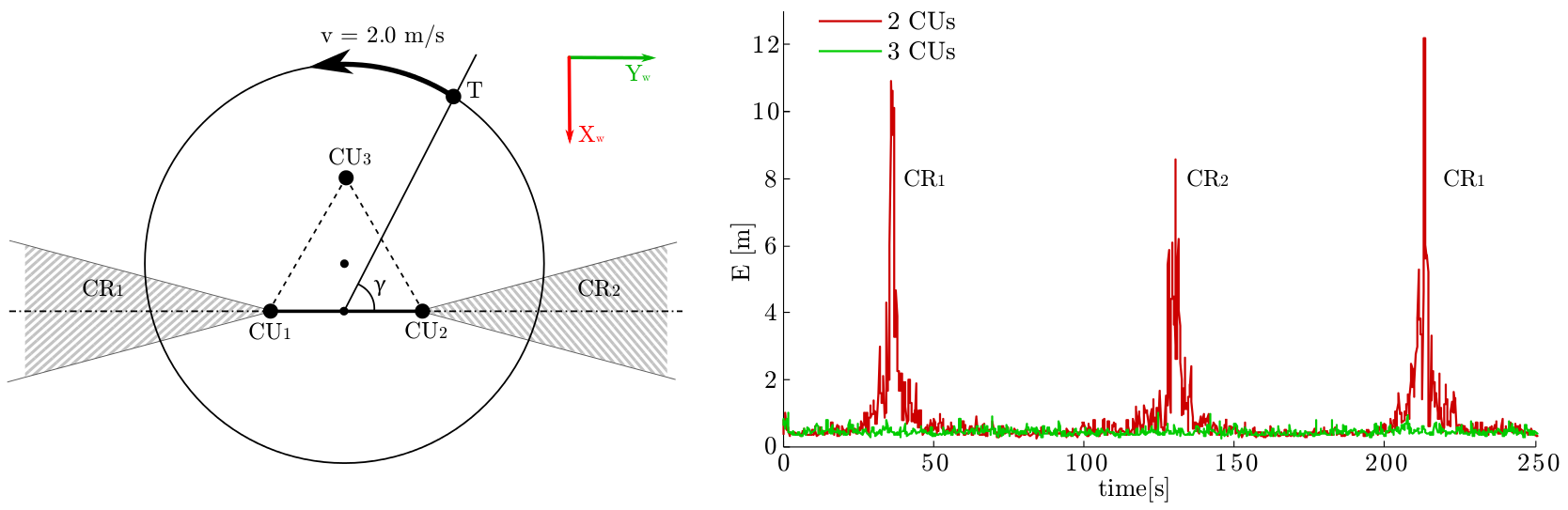

The main strength of the system is that it is designed to work with an arbitrary number of camera units, so the estimated position of the target can be refined by combining multiple hypotheses. It is straightforward to show that using only two camera units can result in significant localization errors compared with a three-camera topology. Consider a two-camera scenario in which the target moves along a circle: there are two cone-shaped critical regions (CR) where the localization error increases. When a third camera unit is added, no critical regions remain and the localization error is minimized.
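The effect can be reproduced with a simplified 2D model. The sketch below assumes that each camera measures only a bearing to the target with the same angular noise, and it uses a standard linearized error estimate (the square root of the trace of the inverse information matrix). The camera positions, circle radius, and noise level are hypothetical values chosen for illustration, not measurements from the system. In this model the error grows without bound where the target is collinear with both cameras, which is one plausible reading of the critical regions described above.

```python
import math

def rms_position_error(cameras, target, sigma_rad):
    """Linearized RMS 2D position error for bearing-only measurements."""
    jxx = jxy = jyy = 0.0
    w = 1.0 / (sigma_rad ** 2)  # weight of one bearing measurement
    for cx, cy in cameras:
        dx, dy = target[0] - cx, target[1] - cy
        r2 = dx * dx + dy * dy
        # Gradient of the bearing atan2(dy, dx) with respect to the target position.
        gx, gy = -dy / r2, dx / r2
        jxx += w * gx * gx
        jxy += w * gx * gy
        jyy += w * gy * gy
    det = jxx * jyy - jxy * jxy
    # A (numerically) singular information matrix means the lines of sight are parallel.
    if det <= 1e-12 * (jxx + jyy) ** 2:
        return math.inf
    return math.sqrt((jxx + jyy) / det)  # sqrt(trace(J^-1)) for a 2x2 matrix

# Hypothetical layout in meters; the target moves on a circle around the origin.
two = [(-100.0, 0.0), (100.0, 0.0)]
three = two + [(0.0, 150.0)]
radius, sigma = 500.0, 1e-3  # assumed circle radius and 1 mrad bearing noise

circle = [(radius * math.cos(math.radians(a)), radius * math.sin(math.radians(a)))
          for a in range(360)]
worst_two = max(rms_position_error(two, p, sigma) for p in circle)
worst_three = max(rms_position_error(three, p, sigma) for p in circle)
```

In this layout `worst_two` is unbounded because the circle crosses the line through both cameras twice, while `worst_three` stays finite because no point can be collinear with three non-collinear cameras.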

Hardware

The camera unit consists of a surveying tripod, a FLIR PTU-D46-70 pan-tilt (P&T) unit, a Prosilica GT 1290C camera (RGB, 1280×960 px, 33.3 FPS) and a desktop PC with the following hardware: Intel Core i5 4590 CPU (4 cores @ 3.3 GHz), Nvidia GeForce GTX 760 GPU, 4 GB RAM.

Software

The system is an optimized C++ implementation built on the ROS (Robot Operating System) framework and the Gazebo physics simulator. Computationally demanding parts of the visual tracking are accelerated on the GPU using CUDA.

Applications

The system is designed for mobility and temporary deployment. Its modularity allows custom scaling and fast deployment in a wide range of scenarios, including perimeter monitoring, early threat detection in defense systems, and air traffic control in public spaces.

Features

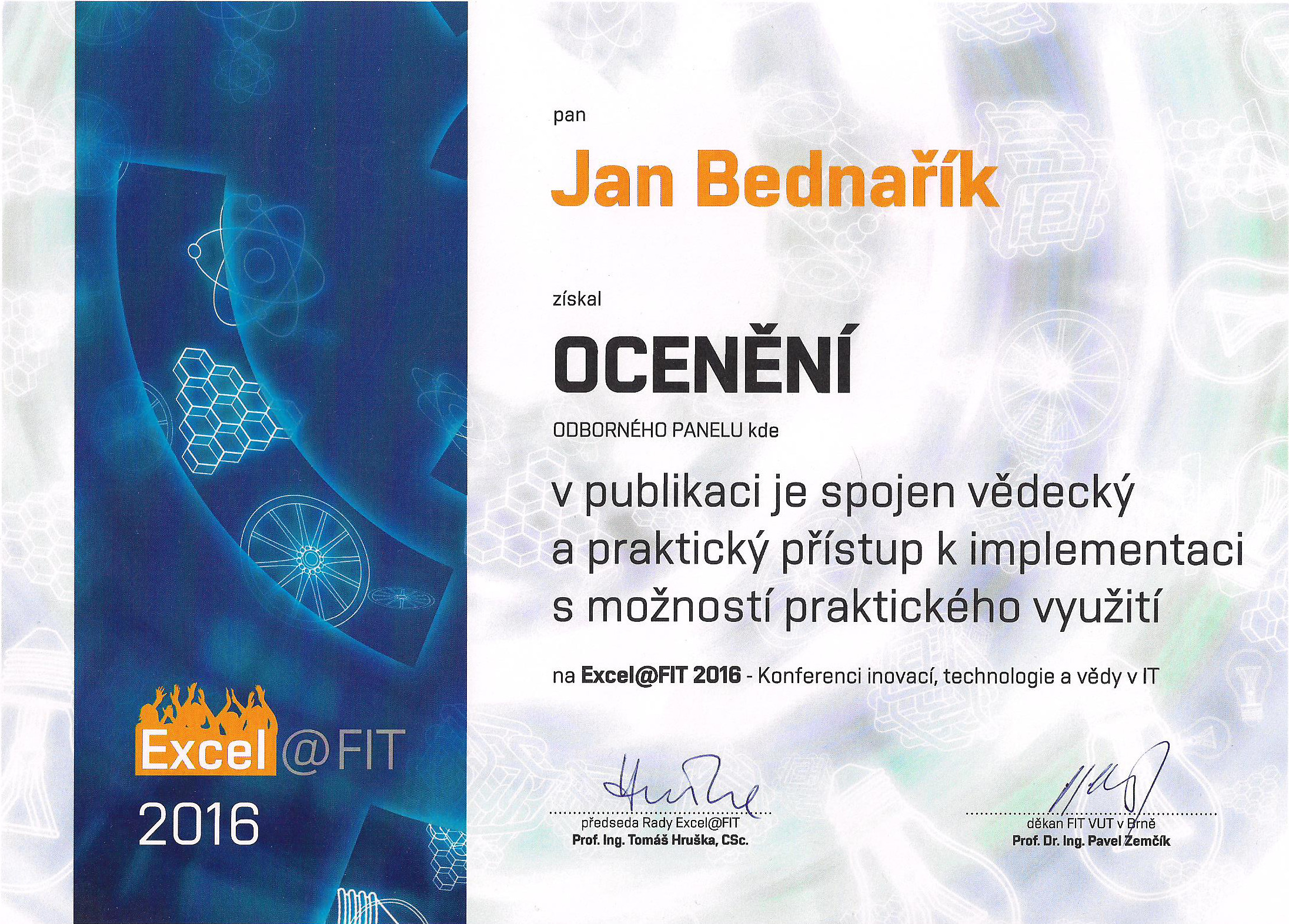

Awards

The system was presented at the Excel@FIT 2016 conference (Student competition conference of innovations, technology and science in IT), held by the Faculty of Information Technology, Brno University of Technology, on 4 May 2016. The project was awarded two prizes, one from the expert community and one from the expert panel, for combining a scientific and a practical approach and for implementing a real-world product.